1. Executive Summary

This report is a new study of reading, how it works, and how to achieve that mysterious state referred to as “readability”. It’s targeted in the first instance at electronic books, but is also relevant everywhere else that text is read.

If the ideas in this document work – and there are very strong signs that they will – they will change the world. That’s a grandiose claim, but reading is a core human task. We were not ready to implement the much-hyped “Paperless Office” in the 1970s and 1980s, and the main obstacle to that vision came down to one question: how can you have a paperless office when reading on the computer screen is so awful?

We are about to break through that barrier. And everything will change when we do.

I’ve read around 12,000 pages of research papers, books and articles over the past several months. The (hopefully logical) case that follows is almost an exact reversal of the discovery process that took place.

The top-level conclusions are:

- Pattern recognition is a basic behavior of all animals that became automatic, unconscious and unceasing to ensure survival.

- Humans have developed visual pattern recognition to a high degree, and human brain development has given priority to the visual cortex, which is a key component of the recognition system.

- Pattern recognition is key to the development of language and especially writing and reading systems, which depend entirely upon it.

- The book is a complex technological system whose purpose is to Optimize Serial Pattern Recognition, so it can be carried on at a basic instinctive level, leaving the conscious cognitive processing of the reader free to process meaning, visualize and enter the world created by the writer. I call this system OSPREY.

- OSPREY is how books work, and the same optimization can be done algorithmically for electronic books and other computer screens by developing two new technologies, both of which are described in this paper:

  - ClearType font display technology that can greatly improve the screen display of letter- and word-shapes, recognition of which lies at the heart of reading.

  - An OSPREY reading engine that will automatically take structured content and display it according to OSPREY rules.

2. Introduction

This ongoing study into the readability of text on screen is being carried out as part of Microsoft’s “Bookmaker” Electronic Books project.

If electronic books are ever to become an acceptable alternative to books in print, readability is the biggest single challenge they must overcome. We can deliver text on screen, and the computer offers significant potential advantages in terms of searching, adding active time-based media such as sound, carrying many different books in a single device, and so on.

But will electronic books be readable? Will people ever want to spend the same amount of time looking at a screen as they spend today reading a printed book?

People still don’t like to read even relatively short documents on screen, whereas they will happily spend many hours “lost” in a book. Unless we can make significant advances in readability, electronic books will be limited to niche markets in which early adopters are prepared to put up with relatively poor readability. Is it merely a question of waiting until screens get better?

Almost 15 years ago, I helped develop a hypertext product aimed at moving us towards the “Paperless Office”. As we know, the Paperless Office has so far been a complete bust; more paper is produced today as a result of the widespread adoption of the desktop computer than at any time in history.

The Paperless Office foundered on the same shoal as the first attempts to produce electronic books: poor screen readability, which mattered so much because reading is at the core of everything we do. This paper, I hope, explains what went wrong, and how to fix it. The Paperless Office is now a real possibility. We can make it a reality.

2.1 First step: understand what works

To understand what went wrong, and how to fix it, the best place to start is by asking the question: “What went right?”

There is one indisputable fact: The Book works.

Boiled down to its essence, a book is basically sooty marks on shredded trees. Yet it succeeds in capturing and holding our attention for hours at a stretch. Not only that, but as we read it, the book itself disappears. The “real” book we read is inside our heads; reading is an immersive experience.

What’s going on here? What’s the magic?

Those questions are the starting-point of this study.

Although a great deal of readability and reading research has been done over the past couple of centuries, reading and how it works remain something of a mystery.

One body of work has focused largely on typography and legibility. Another body of work has examined the psychology and physiology of reading. All the research so far has added valuable data to the body of knowledge. But it has failed to explain the true nature of reading and readability, possibly because it was the work of specialists, each with a strong focus in a single area such as psychology, physiology or typography.

I’m not a specialist, although I’ve been dealing with type for 30 years. This paper takes a generalist approach, which I believe is the key to understanding the phenomenon of immersive reading.

Some great work has been done on the specifics. But what has been lacking until now is a way of tying all of this work together, and some important pieces of the puzzle were also missing. Writing, printing and binding books, together with the human beings who read them, make up a “system”. Analyzing its parts does not reveal the whole picture.

2.2 A General Theory of Readability

This paper puts forward a “General Theory of Readability”, which builds on the findings of these different areas of research and adds perspectives from the study of information processing and instinctive human behavior to create a new unified model of the reading process. I believe this model gives new insight into the magic of the book: how it works, and why it works. And thus it tells us how to recreate that magic on the screen.

Something deep and mysterious happens when we read, intimately linked to human psychology and physiology, and probably even to our DNA.

The book as we know it today did not happen by chance. It evolved over thousands (arguably millions) of years, as a result of human physiology and the way in which we perceive the world. In a very real sense, the form of the book as we know it today was predetermined by the decision of developing humans to specialize in visual pattern recognition as a core survival skill.

The book is a complex and sophisticated technology for holding and capturing human attention. It is hard to convince people of its sophistication; there are no flashing lights, no knobs or levers, no lines of programming code (there really is programming going on, but not in any sense we’d recognize today…).

The conclusions in this document could have great implications for the future of books. But books are only an extreme case of reading – a skill we use constantly in our daily lives. Advances made to enhance the readability of books on the screen also apply to the display of all information on computer screens, inside Microsoft applications and on the Web.

This has been an amazing journey of exploration for me. The central question: “What’s going on here?” kept leading backwards in time, from printed books to written manuscripts, to writing systems, to pictures drawn on the walls of caves by prehistoric man, and eventually to primitive survival skills and behaviors we humans share with all other animal forms. At the outset, I had no idea just how far back I’d have to go.

2.3 What’s this got to do with software?

Some of the areas touched on in this report are pretty strange territory for a company at the leading edge of technology at the end of the 20th Century. But computer software isn’t an end in itself. We build it so people can create, gather, analyze and communicate information and ideas. Reading and writing are at the very heart of what we do. The difficulty that most people have in getting to grips with computers is a direct result of the fact that they force us to work in ways that are fundamentally different from the way we naturally perceive and interact with our world.

I came across the quote from Stanley Morison – one of the greatest and best-known names in the world of typography – only at the end of this current phase of work. Morison was talking about the design of new typefaces, but it is great advice for any researcher, in any field.

He is absolutely correct. Trying to get right back to the roots and basic principles involved in reading allows us to analyze the book and see it as a truly sophisticated technological system. And understanding how this technology hooks into human nature and perception makes it as relevant and alive today as when Johannes Gutenberg printed the first 42-line Bible in Mainz more than five centuries ago, or when the first cave-dwellers drew the “user manual” for hunting on the walls of their homes.

Understanding the root-principle is key to taking text into the future. The computer can go beyond the book – but only if we first really understand it, then move forward with respect and without breaking what already works so well.

The basic principles outlined in this paper will allow us to focus future research on areas most likely to be productive, to develop specific applications for reading information on the screen, to develop testing methods and metrics so we can track how well we are doing, and to go “beyond the book”.

2.4 Why is this a printed document?

Ideally, this document should have “walked the talk”, and been in electronic format for reading on the screen, demonstrating the validity of its conclusions.

Unfortunately, no system exists today that can deliver truly readable text on the screen. We have a first, far-from-perfect implementation, constrained by the device on which it runs. It is already better than anything seen so far, and will improve dramatically over the next few months.

This paper, I hope, explains how to build the first really usable eBook, and defines its functionality. But there’s a lot more work to be done to make it real.

3. Detailed Conclusions

- One of the most basic functions of the human brain is pattern-recognition. We recognize and match patterns unconsciously and unceasingly while we are awake. This behavior developed for survival in pre-human (animal) evolution, and humans have developed it to a highly sophisticated level. It is coded into our DNA. The growth in size of the visual cortex in the human brain is believed to have resulted from the increasing importance to us of this faculty.

- The book has evolved from primitive writing and languages into a sophisticated system that hooks into this basic human function at such a deep level we are not even aware of it. The effect is that the book “just disappears” once it hooks our attention.

- The book succeeds in triggering this automated process because it is a “system” whose purpose is to Optimize Serial Pattern Recognition. From the outside it looks simple – not surprising, since it’s designed to become invisible to the reader. There are no bells, whistles, or flashing lights. But “under the hood” the technology is as complex as an internal combustion engine, and similarly it depends on a full set of variables that must be tuned to work together for maximum efficiency. Much previous research has failed to grasp this because of researchers’ tendencies to take the traditional path of attempting to isolate a single variable at a time. To gain full value from these variables requires first setting some invariable parameters, then adjusting complex combinations of variables for readability. I have called this complete system OSPREY (from Optimized Serial Pattern Recognition).

- OSPREY has an “S-shaped” efficiency curve. Readability improves only slowly at first as individual variables are tuned. But once enough variables are tuned to work together, efficiency of the system rises dramatically until eventually the law of diminishing returns flattens the curve to a plateau. Conversely, it takes only two or three “broken” or sub-optimal variables to seriously degrade readability.

- Reading is a complex and highly automated mental and visual process, but it makes no demands on conscious processing, leaving the reader free to distill meaning, to visualize, and to enter the world created by the writer. That world is in reality a combination of the writer’s creation and the reader’s own interpretation of it.

- Interaction with this technology changes the level of consciousness of the reader. A reader who becomes “lost” in a book is in a conscious state that is closest to hypnotic trance. OSPREY allows the reader to achieve this state of consciousness by reaching his or her own “harmonic rhythm” of eye movements and fixations that becomes so automatic the reader is no longer aware of the process.

- All of the parameters and variables needed to achieve OSPREY are already known for print. They can be duplicated on the computer screen, but some technology improvements are required. The computer screen is weakest relative to print in fonts and font rendering, the area that has the greatest effect on the way letter and word shapes are presented to the reader. In the course of this study, the author and others have developed a new rendering technology (Microsoft® ClearType™) that greatly improves the quality of type on existing screens.

- OSPREY can be produced algorithmically, with no requirement for manual intervention. Now that we have proved that ClearType™ works, all of the required technologies are known. But they have never been assembled into a full system and tuned for the screen with OSPREY goals in mind. Since no such complete system for displaying text on screens has yet been built, screen display of text currently sits on the “low efficiency” segment of the S-Curve. This explains why people prefer reading from paper to reading from a screen, especially for longer reading tasks – a fact documented by many researchers, and by our own experiences.

- OSPREY technology will allow Microsoft to deliver electronic books that set new standards for readability on the screen. The technology can be folded back into mainstream Web browsing and other software to bring major improvements in the readability of all information on the screen.

- Many attempts have been made over the years to develop alternative methods of improving reading speed and comprehension. Examples include technologies such as Rapid Serial Visual Presentation (flashing single words on a computer screen at accelerated rates) that are claimed to greatly increase reading speeds. They have failed to gain acceptance because they do not take the holistic approach needed to achieve the OSPREY state, and fail to take into account the wide variations in reading speed shown by a single reader during the course of reading one book. However, the possibility remains that some new technology can be developed to revolutionize reading, and further research should be carried out to fully explore alternative approaches. Any such “revolutionary” technology will have to be extremely powerful and easy to learn and apply in order to succeed. Not only will it have to improve the immersive reading experience, it will then have to be widely adopted as a replacement for the current system, which has evolved over thousands of years into its current mature technology and is highly bound up with the nature of humans.

- We are now building an OSPREY reading engine from existing components and new technologies. For success, the team must continue to have a mix of software developers, typographers and designers. An important part of this project will be work on new fonts for reading on the screen, especially new font display technologies outlined in this paper to squeeze additional resolution from the mainstream display technologies which are likely to remain at or near their current resolution level for some years.

- Once an OSPREY system is built, we should carry out further research into cognitive loading – a way of measuring the demands that the reading process makes on our attention. We should compare cognitive loading values for the printed book, for current Web-based documents, and for tuned OSPREY systems. This research will validate the OSPREY approach and provide valuable data for optimal tuning of OSPREY systems. We must develop a range of metrics for immersive reading, and tools to track them.

- Using these measures will enable Microsoft to take the book to a new level that is impossible to achieve in print, and then apply the same technologies to all information. Understanding the basic OSPREY principles and implementing a system will enable us to use computer technology to enhance and reinforce the OSPREY effect without breaking it, for example by:

  - Creating new typefaces and font technologies to enhance pattern-recognition, especially for LCD screens.

  - Providing unabridged audio synchronized to the text so the reader can continue the story in places they would normally be unable to read – for example while driving – switching transparently between audio and display.

  - Using subtle and subliminal effects such as ambient sound and lighting to reinforce the book’s ability to draw the reader into the world created by the author. (Subtle is the keyword here: effects must enhance the OSPREY state without disrupting it.) This utilizes the “Walkman Effect” to help the reader move more quickly from the physical world into the world of the book, and to keep her attention there by enhancing the book’s already-powerful capability to blank out distractions. At this point, this is merely a possibility; there is no proof that it will work, or that it will not disrupt the flow of reading. This should be investigated in further research.

  - Defining new devices or improving existing desktop PCs with displays tuned to the “sweet spots” which are identified by the OSPREY research. Documents can be formatted for these “sweet spots” and intelligently degrade to provide maximum readability on other devices. A key to this will be the implementation of “adaptive document technology” (Microsoft patent applied for) which will automatically reformat documents to be read on any device while still adhering as closely as possible to OSPREY principles within device constraints. This technology, and the devices that run it, will help drive the paradigm shift from the desktop PCs of today to the portable, powerful, information-centric devices of tomorrow.
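The S-shaped efficiency curve described in these conclusions can be made concrete with a toy model. The sketch below is purely illustrative and is not the OSPREY tuning method: it assumes readability follows a logistic curve in the number of well-tuned variables, and the constants TOTAL_VARIABLES, MIDPOINT and STEEPNESS are invented for the example. With the midpoint placed near the top of the range, one curve shows both effects: tuning the first few variables barely helps, while breaking two or three variables in an otherwise fully tuned system drops the score sharply.

```python
import math

# Toy model only: the report gives no formula for its S-curve. We assume a
# logistic function of the number of well-tuned variables; all three
# constants below are invented for illustration.
TOTAL_VARIABLES = 12
MIDPOINT = 9.0    # tuned-variable count where each extra variable helps most
STEEPNESS = 1.5   # how sharply readability climbs around the midpoint


def readability(tuned: int) -> float:
    """Return a 0..1 readability score when `tuned` variables are well tuned."""
    return 1.0 / (1.0 + math.exp(-STEEPNESS * (tuned - MIDPOINT)))


def gain(tuned: int) -> float:
    """Improvement from tuning one more variable (the curve's local slope)."""
    return readability(tuned + 1) - readability(tuned)


if __name__ == "__main__":
    for n in range(TOTAL_VARIABLES + 1):
        bar = "#" * round(readability(n) * 40)
        print(f"{n:2d} tuned  {readability(n):.3f}  {bar}")
    # Treat a "broken" variable as an untuned one (another simplification).
    for broken in (1, 2, 3):
        score = readability(TOTAL_VARIABLES - broken)
        print(f"{broken} broken -> {score:.3f}")
```

Running it prints one bar per level of tuning: nearly flat at first, a steep climb around the midpoint, then a plateau as diminishing returns set in. The report’s point that the variables must be tuned to work together is not modeled here; each variable simply counts as tuned or untuned.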

4. Pattern recognition: a basic human skill

One of the most basic skills of living beings is pattern-recognition. It is a fundamental part of our nature, one we humans share with animals, birds, and even insects and plants.

Pattern recognition is a precursor to survival behavior. All life needs to recognize the patterns that mean food, shelter, or threats to survival. A daisy will turn to track the path of the sun across the sky. Millions of years ago, the dog family took the decision to specialize in olfactory and aural pattern-recognition. They grew a long nose with many more smell receptors, and their brains developed to recognize and match those patterns.

As our ancestors swung through the trees, a key to survival was the ability to quickly recognize almost-ripe fruit as we moved rapidly past it. (Unripe fruit lacked nutrition and caused digestive problems; but if we waited for it to become fully ripe, some other ape got there first…)

So we specialized in visual pattern recognition, and grew a visual system to handle it (including a cerebral cortex optimized for this task).

Pattern-matching in humans makes extraordinary use of the visual cortex, one of the most highly-developed parts of the human brain. Recognition of many patterns appears to be programmed at DNA level, as evidenced by the newborn human’s ability to recognize a human face.

In primitive times, we had to learn which berries were safe to eat, and which were dangerous. We had to learn to recognize movement using our peripheral vision, then use our higher-acuity focus to match the pattern to our “survival database” to evaluate whether it was caused by another ape (opposite sex for breeding purposes; same-sex, possible territorial battle) or a lion (predator: threat).

For our survival, this pattern recognition had to become unceasing and automatic. In computer terms, pattern matching belongs to the “device driver” class of program. It is activated at birth (maybe even at conception), and remains running in the background until we die, responding to interrupts and able to command the focus of the system when required.

Anyone who studies animal tracking and survival skills realizes at a very early stage that at the core of all these skills is pattern-recognition and matching.

Jon Young, a skilled animal tracker and naturalist who runs the Wilderness Awareness School in Duvall, WA, spent many years being mentored in tracking and wilderness skills by Tom Brown Jr., one of the best-known names in US tracking and wilderness skills circles.

Jon has studied the tracking and survival skills still used by native peoples all over the world, including Native Americans – who were (and in some cases, still are) masters of the art. He has documented how children begin to learn from birth the patterns essential to their survival.

For example, the Kalahari region of Africa is one of the most inhospitable parts of the world. There is almost no surface water for most of the year. Yet to the tiny Kalahari Bushmen this is “home”, and provides all that they need to survive.

One of the first survival skills taught to the Bushmen’s children is how to recognize the above-ground “pattern” of a particular bush which has a water-laden tuber in its root system, invisible from the surface. If this is sliced, and the pulp squeezed, it provides a large quantity of pure drinking water.

All survival skills which involve animal tracking, or use of wild plants for food, medicines, clothing etc., are based on pattern recognition, and learning from birth the right database for the relevant ecosystem. Taking a Kalahari Bushman and placing him in the Arctic would pose him a serious survival problem. An Eskimo transplanted to the Kalahari would have different but equally serious challenges.

There are patterns associated with wolf, domestic dog, wildcat, cougar, bear, squirrel, or mouse. Each has subtleties that enable the skilled tracker to recognize different events, such as an animal that is hunting, or running from a predator. There are even patterns within tracks which show when an animal turned its head to the side, perhaps to listen to a sound which means danger, or just to nibble a juicy shoot from a bush as it quietly grazed in the forest.

An expert in survival, such as a native or a well-trained woodsman, is one who has studied enough of the patterns of nature – the tracks of animals, the sounds of the birds, and so on – to have built a large “database” of patterns in his or her memory store.

“Nature provides everything we need to survive – and even thrive – in what we call the wilderness. All we have to do is learn to recognize it,” (: 1998).

It is also probably no coincidence that among the first uses of symbols on record are what appear to be records of (or how-to instructions for) hunting.

4.1 Pattern Recognition and Reading

What has all this to do with the life of modern man, and especially with reading? Well, most of us may have left the woods to live in towns and cities, but the woods have never left us. We still use this same survival trait of pattern recognition unceasingly and unconsciously in our daily lives. It’s hard-wired into the organism.

Pattern recognition is how we walk down a hallway without continually bumping into the walls. It’s how we stay on the sidewalk and out of the traffic on the roadway. It’s how we recognize each other. Pattern recognition still tells us where to find food – why else would McDonald’s be so protective of its corporate logo?

Modern civilization makes constant use of the fact that we continually recognize and match patterns. Corporate logos, freeway signs, “Walk/Don’t Walk” signals, and so on are all examples.

One of the most pervasive applications of our innate pattern recognition behavior is reading. We learn to read by first learning to recognize the basic patterns of letters. Then we learn to recognize the larger patterns of words. Once we have learned the pattern of the word “window”, we never again read the individual letters; the larger pattern is immediately matched as a gestalt. If we are skilled readers, we may learn to match patterns at phrase or sentence level, or perhaps in even larger units.

Reading is an amalgam of highly automated processes that include word recognition. Seen as a system, the task of reading is simply serial pattern recognition. Patterns are recognized as symbols, groups of which are inferred to have meaning. Word recognition is the primary task of reading. In effect, the book takes our highly-tuned survival skill for a walk through a friendly neighborhood park, where almost all the people we meet are old friends whom we recognize immediately (depending on the level of challenge in the content). When we come across a new pattern, we are able to find its meaning (by consulting a dictionary or “pattern database”) and enter it into our memory of stored patterns as a new friend.
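The serial pattern recognition idea can be illustrated with a toy model. It is a sketch under stated assumptions, not a model of the visual system: the reader’s stored patterns are represented as a plain set of words (a hypothetical stand-in for the “pattern database”), known words count as instant matches, and each unfamiliar word triggers a lookup and is then stored. The function name read_serially is invented for this example.

```python
import re

# Toy model (an assumption, not the report's method): the reader's stored
# patterns are a set of words, and reading is one serial pass over the text.


def read_serially(text: str, known: set[str]) -> tuple[int, list[str]]:
    """Return (instant matches, words needing a lookup); updates `known`."""
    instant = 0
    looked_up: list[str] = []
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in known:
            instant += 1             # matched at once, as a whole-word gestalt
        else:
            looked_up.append(word)   # consult the "pattern database"...
            known.add(word)          # ...and store it as a new friend
    return instant, looked_up


if __name__ == "__main__":
    vocabulary = {"the", "a", "window", "was", "open", "and", "came", "in"}
    print(read_serially("The window was open and a zephyr came in.", vocabulary))
    # (8, ['zephyr'])
    print(read_serially("A zephyr came in the window.", vocabulary))
    # (6, [])
```

Meeting the same new word a second time costs nothing extra, mirroring the “new friend” above. Real word recognition works on visual letter and word shapes rather than spelled strings, which this sketch deliberately ignores.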

If reading, especially of longer texts like books, is analyzed in detail from this viewpoint, the “art” of typography and design can be shown to be a highly-sophisticated technology with a coherent underlying logic which is set up to make Serial Pattern Recognition as effortless as possible. The book is the embodiment of a technology of Optimized Serial Pattern Recognition.

In honor of its wilderness roots, I’ve called it OSPREY.

4.2 The Concept of Harmonic Gait

There is another feature of animal tracks that is highly relevant to readability: the concept of harmonic gait.

Every animal has its own specific harmonic gait: the pattern in a group of successive tracks that the animal makes in its normal, relaxed state. Tracks are regularly spaced. In animals with four long legs, for example the dog, cat and deer families, the right rear paw or hoof lands directly on top of the print left by the right front paw or hoof. Trackers call this direct register.

When the animal is moving faster than normal, rear feet land ahead of front feet, until gait speeds up into a canter or gallop, and the pattern changes. When the animal is moving slower than normal, the rear feet land behind the impressions left by the front feet. But even these new patterns are regular and predictable.

Trackers use these regular gaits to analyze animal behavior. Changes in gait are clues to what the animal was doing. Speeding up normally indicates either predatory behavior (e.g. chasing the next meal) or trying to escape from a perceived threat (e.g. when the deer spots movement in its peripheral vision, and matches it to the pattern of “mountain lion”).

These regular gaits have another important use. If a tracker wants to find out where an animal is now, or where it went, he obviously has to follow its tracks. This is easy enough in soft sand, where tracks are deep and easy to see. But when the animal moves over rougher or harder ground, tracks are much harder to spot.

If the tracker knows the animal’s gait, he can predict with reasonable certainty exactly where the next track is likely to be found. He can narrow his search for the next print to the most likely area, find it quickly even if its traces are faint, and confirm the animal’s direction of movement. By using gait measurements (with a “tracking stick” easily made from a fallen branch), trackers can continue to follow the animal in conditions that would otherwise make tracking extremely difficult, if not impossible.
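The tracker’s use of gait to narrow the search can be sketched as a small prediction step. This is a hypothetical illustration, not a documented tracking method: positions are distances in centimeters along the animal’s line of travel, the stride is taken as the average spacing of the tracks found so far, and the 10 cm search tolerance is an invented value. The names predict_next and find_next are made up for the example.

```python
from typing import Optional

# Hypothetical sketch of the tracker's "tracking stick" reasoning.
# Positions are distances in cm along the line of travel (an assumption);
# the 10 cm tolerance is an invented value, not one from the report.


def predict_next(tracks: list[float]) -> float:
    """Predict where the next print should be, using the average stride."""
    if len(tracks) < 2:
        raise ValueError("need at least two tracks to measure a stride")
    strides = [b - a for a, b in zip(tracks, tracks[1:])]
    return tracks[-1] + sum(strides) / len(strides)


def find_next(tracks: list[float], candidates: list[float],
              tolerance: float = 10.0) -> Optional[float]:
    """Search only near the predicted spot; return the closest faint mark."""
    expected = predict_next(tracks)
    nearby = [c for c in candidates if abs(c - expected) <= tolerance]
    return min(nearby, key=lambda c: abs(c - expected), default=None)


if __name__ == "__main__":
    seen = [0.0, 52.0, 101.0, 150.0]     # a regular, relaxed gait
    marks = [30.0, 196.0, 240.0, 260.0]  # scuffs found on hard ground
    print(predict_next(seen))            # 200.0
    print(find_next(seen, marks))        # 196.0
```

Restricting the search to a window around the expected spot is what lets a faint mark be picked out from scuffs elsewhere on hard ground.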

The regular rhythm of the gait acts as a cue to the tracker, telling him exactly where the next pattern-recognition task will take place. The relevance of this will become apparent when we look at typography later in this paper. Books do exactly the same by controlling the pace at which the words are presented and allowing the reader to move through the content at his or her own harmonic or natural gait (which readers change all the time in the course of reading).

The book presents each reader with level ground over which he or she can move at his or her own pace.

5. The Concept of “Ludic” Reading

A key term that may be unfamiliar to readers of this study is ludic reading. The term was coined in 1964 by reading researcher W. Stephenson, from the Latin ludo, meaning “I play”. A ludic reader is someone who reads for pleasure.

Many of the conclusions of this paper are reached as a result of examining the technology of the printed book in conjunction with research carried out by psychologists into reading, especially ludic reading. This is clearly the most relevant form of reading to the eBook.

Ludic reading is an extreme case of reading, in which the process becomes so automatic that readers can immerse themselves in it for hours, often ignoring alternative activities such as eating or sleeping (and even working).

A major shortcoming of most of the research carried out into readability over the last hundred or more years is that it has focused, for practical reasons, on short-duration reading tasks. Researchers have announced (with some pride) that they have used “long” reading tasks consisting of 800-word documents in their research.

Compare this with the average “ludic reading” session. Even at a reading speed of 240 words per minute (wpm), which is low for ludic readers, a one-hour session (in the context of the book, a short read) covers some 14,400 words, eighteen times the length of an 800-word “long” research task.

For very short-duration reading tasks, such as reading individual emails, readers are prepared to put up with poor display of text. They have learned to live with it for short periods. But the longer the read, the more even small faults in display, layout and rendering begin to irritate and distract from attention to content.

The consequence is that a task that should be automatic and unconscious begins to make demands on conscious cognitive processing. Reading becomes hard work. Cognitive capacity normally available exclusively for extracting meaning has to carry an additional load.

If we are trying to read a document on screen, and the computer is connected to a printer, the urge to push the “print” button becomes stronger in direct proportion to the length of the document and its complexity (the demands it makes on cognitive processing).

The massive growth in the use of the Internet over the past few years has actually led to a huge increase in the number of documents being printed, although these documents are delivered in electronic form which could be read without the additional step of printing. Why? Because reading on screen is too much like hard work. People use the Internet to find information – not to read it.

Research into ludic reading is especially valuable to the primary goal of this study, finding ways of making electronic books readable. If eBooks are to succeed, readers must be able to immerse themselves in reading for hours, in the same way as they do with a printed book.

For this to happen, reading on the screen needs to be as automatic and unconscious as reading from paper, which today it clearly is not.

If we can solve this extreme case, the same basic principles apply to any reading task.

5.1 Ludic Reading Research

“It seems incredible, the ease with which we sink through books quite out of sight, pass clamorous pages into soundless dreams”, Fiction and the figures of life, , 1972.

This passage is quoted in the introduction to “Lost In A Book”, written by Victor Nell, senior lecturer and head of the Health Psychology Unit at the University of South Africa.

Nell’s work is unusual and significant because it concentrates wholly on ludic reading, and details the findings of research projects carried out over a six-year period to examine the phenomenon of long-duration reading.

It looks at the social forces that have shaped reading, the component processes of ludic reading, and the changes in human consciousness that reading brings about. Nearly 300 subjects took part in the studies. In addition to lengthy interviews, subjects’ metabolisms were monitored during ludic reading. The data collected gives a remarkable insight into the reading process and its effect on the reader.

For anyone interested in reading research, this book is worth reading in its entirety. I’ll try to summarize the main points, then develop them. I’ve devoted a whole section to this book, because it’s such a goldmine of data.

Reading books seems to give a deeper pleasure than watching television or going to the theater. Reading is both a spectator and a participant activity, and ludic readers are by and large skilled readers who rapidly and effortlessly assimilate information from the printed page.

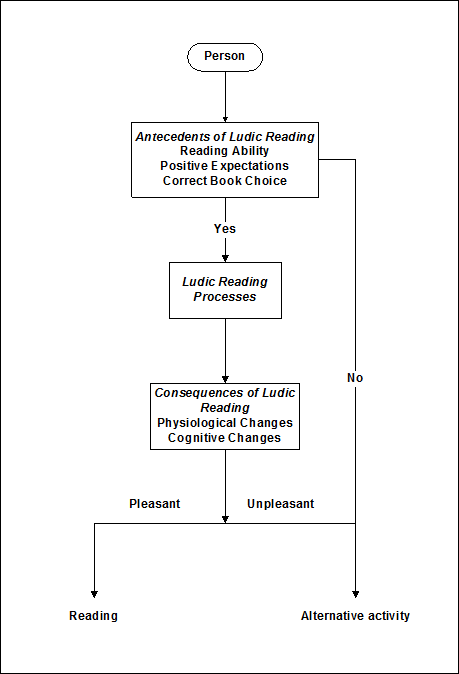

Nell gives a skeletal model of reading, then develops it during the course of the book.

5.2 The requirements of Ludic Reading

Nell gives three preliminary requirements for ludic reading: Reading Ability, Positive Expectations, and Correct Book Choice. In the absence of any one of these three, ludic reading is either not attempted or fails. If all three are present, and reading is more attractive than the available alternatives, reading begins and is continued as long as the reinforcements generated are strong enough to withstand the pull of alternative attractions.
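The gating logic described above can be expressed as two boolean checks. This is a minimal sketch, not Nell's own formalism. The numeric "appeal" and "reinforcement" scores, and every name below, are hypothetical stand-ins for quantities he describes only qualitatively.

```python
from dataclasses import dataclass


@dataclass
class Antecedents:
    """Nell's three preliminary requirements for ludic reading."""
    reading_ability: bool
    positive_expectations: bool
    correct_book_choice: bool


def reading_begins(pre: Antecedents, reading_appeal: float, best_alternative: float) -> bool:
    """Reading starts only if all three requirements hold and reading beats the alternatives."""
    all_present = (
        pre.reading_ability
        and pre.positive_expectations
        and pre.correct_book_choice
    )
    return all_present and reading_appeal > best_alternative


def reading_continues(reinforcement: float, pull_of_alternatives: float) -> bool:
    """Once started, reading goes on while reinforcement outweighs competing attractions."""
    return reinforcement > pull_of_alternatives


# Hypothetical reader with the wrong book: one missing requirement is enough to prevent reading.
wrong_book = Antecedents(reading_ability=True, positive_expectations=True, correct_book_choice=False)
print(reading_begins(wrong_book, reading_appeal=0.9, best_alternative=0.2))  # False
```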

Reinforcements include physiological changes in the reader mediated by the autonomic nervous system, such as alterations in heartbeat, muscle tension, respiration, electrical activity of the skin, and so on. Nell and his co-researchers carried out extensive monitoring experiments on subjects’ metabolic rates, and collected hard data showing metabolism changes in readers as they became involved in reading.

These events are by and large unconscious and feed back to consciousness as a general feeling of well-being. This ties in well with how book typography has developed to make the word-recognition aspect of the reading process automatic and unconscious, which we will examine later in this paper.

In the reading process itself, meaning is extracted from the symbols themselves and formed into inner experience. It is clear that the ability of the content to engage the reader (the “quality” of writing), the reader’s consciousness, social and cultural values and personal experiences all play a part in this process.

Nell says the “greatest mystery of reading” is its power to absorb the reader completely and effortlessly, and on occasion to change his or her state of consciousness through entrancement.

Humans can do many complex things two or more at a time, such as talking while driving a car. But in each such pairing one behavior is highly automatized, so only the other makes demands on conscious attention.

However, it is impossible to carry on a conversation or do mental arithmetic while reading a book. The more effortful the reading task, the less we are able to resist distractions and the more mental capacity we have available for other tasks, such as listening to the birds in the trees or other forms of woolgathering.

One of the most striking characteristics of ludic reading is that it is effortless; it holds our attention. The ludic reader is relaxed and able to resist outside distractions, as if the work of concentration is done for him by the task.

The moment evaluative demands intrude, ludic reading becomes “work reading”.

5.3 Highly-automated processes

Skilled reading is an amalgam of two kinds of activity: highly automated processes (word recognition, syntactic parsing, and so on) that make no demands on conscious processing, and the extraction of meaning from long continuous texts.

Although reading uses only a fraction of available processing capacity, it does use up all available conscious attention. Furthermore, ludic reading, which makes no response demands of the reader, may entail some arousal, though little effort.

The term reading trance can be used to describe the extent to which the reader has become a “temporary citizen” of another world – has “gone away”.

“Attention holds me, but trance fills me, to varying degrees with the wonder and flavor of alternative worlds. Attention grips us and distracts us from our surroundings; but the otherness of reading experience, the wonder and thrill of the author’s creations (as much mine as his), are the domain of trance.”

“The ludic reader’s absorption may be seen as an extreme case of subjectively effortless arousal, which owes its effortlessness to the automatized nature of the skilled reader’s decoding activity; which is aroused because focused attention, like other kinds of alert consciousness, is possible only under the sway of inputs from the ascending reticular activating system of the brainstem; and which is absorbed because of the heavy demands comprehension processes appear to make of conscious attention.”

5.4 Eye movement

Reading requires two kinds of eye movements: saccades, or rapid sweeps of the eye from one word group to the next, and fixations, in which the gaze is focused on one word group.

Reading speeds vary. There is a neuromuscular limit of 800-900 words per minute. Intelligent readers cannot fully comprehend even easy material at speeds above 500-600 wpm. The average college student reads at 280 wpm, and superior college readers at 400-600 wpm. Skilled readers read faster than passages can be read aloud to them.

Even skilled readers pick up information from at most eight or nine character spaces to the right of a fixation, and four to the left. Fixation duration is dependent on cognitive processing (i.e. is determined by the difficulty or complexity of the material being read).
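A fixation can be pictured as a window on the line of text. The sketch below uses the limits quoted above: four character spaces to the left and nine to the right, the upper figure. It treats these limits as fixed, which is a simplification for illustration. The function name `perceptual_span` is hypothetical.

```python
def perceptual_span(line: str, fixation: int, left: int = 4, right: int = 9) -> str:
    """Return the part of `line` a reader can draw information from at one fixation.

    `fixation` is the index of the fixated character; spaces count as character
    spaces, as in the figures quoted above.
    """
    start = max(0, fixation - left)
    end = min(len(line), fixation + right + 1)  # +1 so the slice includes the rightmost position
    return line[start:end]


line = "the quick brown fox jumps over the lazy dog"
print(repr(perceptual_span(line, 10)))  # fixating the "b" of "brown": 'ick brown fox '
```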

Findings of a sophisticated study (, 1980) discredit the widely-held view that the saccades and fixations of good readers are of approximately equal length and duration, or that reading ability is improved by lengthening saccade span and shortening fixation duration.

Perceptually-based approaches to the improvement of reading speed (increasing fixation span, decreasing saccade frequency, learning regular eye movements, reading down the center of the page, and so forth) are unsupported by studies which, on the contrary, show skilled readers do not use these techniques.

Studies show that all book readers also read newspapers and magazines; the converse does not apply.

Ludic readers read at wildly different rates: in Nell’s study, the fastest read at five times the speed of the slowest.

The reading speed of each individual varied just as dramatically in the course of reading a book. One reader moved between a fastest speed of 2,214 wpm and a slowest of 457 wpm, while across the study group the average ratio between fastest and slowest speeds was 2.69.
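The group figure of 2.69 is a ratio of fastest to slowest speed. Applying the same calculation to the single reader described above shows how far that reader sat from the group average. This is a small sketch that assumes the ratio is computed per reader as fastest divided by slowest, as the text implies. The function name is hypothetical.

```python
def speed_ratio(fastest_wpm: float, slowest_wpm: float) -> float:
    """Ratio of a reader's fastest to slowest observed reading speed."""
    if slowest_wpm <= 0:
        raise ValueError("slowest speed must be positive")
    return fastest_wpm / slowest_wpm


# The individual reader cited above, for comparison with the group average of 2.69.
print(round(speed_ratio(2214, 457), 2))  # 4.84
```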

Nell found readers “savor” passages they enjoy most – often rereading them – while often skimming passages they enjoy less.

These last two findings suggest that any external attempt to present information at a pre-determined speed is doomed to failure, even if the reader is allowed to set presentation speed at their own average reading rate. The only method of controlling presentation rate which offers any hope of success would be to very accurately track the reader’s eye movements and link presentation rate to that.

Pace control is one of the reader’s “reward systems”, and terms such as savoring, bolting and their equivalents are accurate descriptions of how skilled readers read.

5.5 Convention – or optimization?

“The appearance of books has changed very little in the five centuries since the invention of printing. Lettering has always been black on white, lines have always marched down the page between white margins in orderly left-and-right-justified form, and letterspacing has always been proportional”.

Nell refers to these and other typographic features as print conventions, which have exhibited extraordinary stability. Considered together with the unchanging nature of perception physiology, he says, they make Tinker’s Legibility of Print (1963) appear to be the last word on the subject, although many technological changes have caused legibility problems. For example, in many of these technologies word and letter spacing is less tightly controlled; letters may be fractionally displaced to the left or right to create the illusion of a word space, compelling the reader’s eye to make an unnecessary regression. Poor letter definition, low contrast, and distortion of letters and words are also cited as contributing to poor legibility.

Extraordinary stability is a key observation. It suggests that these are not merely print conventions, but optimizations that have stood the test of time. What worked, survived. What did not work disappeared. Survival did not happen because so-called conventions were easier for the printer (in fact, the reverse is the case), but because they are tuned to the way in which people read. Good typesetting requires much more work and attention to detail than bad typesetting. But bad typesetting is not acceptable to readers.

Nell describes these and other effects of the developing technology as “onslaughts on ease of reading”.

Ludic readers seek books which will “entrance” them; the reader’s assessment of a book’s trance potential is probably the most important single decision in relation to correct book choice, and the most important contributor to the reward systems that keep ludic reading going once it has begun.

Readers’ judgements of trance potential override judgements of merit and difficulty. Tolkien’s Lord of the Rings (1954) is a relatively difficult book, but many readers prefer it to easier ones because of its great power to entrance. Best-sellers are entrancing to large numbers of readers.

Nell undertook a large and complex study of the physiology of reading trance using as his subjects a group of “reading addicts”.

5.6 Reading and Arousal

During reading, brain metabolism rises in the visual-association area, frontal eye fields, premotor cortex and in the classic speech centers of the left hemisphere.

Reading is a state of arousal of the system. Humans like to alternate arousal and relaxed states. Sexual intercourse is high arousal followed by postcoital relaxation; reading a book in bed before going to sleep uses the same arousal/relaxation mechanism – reading before falling asleep is especially prized by ludic readers.

This suggests an electronic book (eBook) had better be able to cope with being dropped off the bed! It also suggests that a backlit book, with no need to have a reading light – and perhaps keep a partner from falling asleep – is a positive benefit of the technology. Reaction to early backlit eBook prototypes confirms this is an attractive feature.

Ludic reading is substantially more activated than the baseline state. Immediately following reading, when the reader lays down the book and closes her eyes, there is a “precipitous decline” in arousal, which affects skeletal muscle, the emotion-sensitive respiratory system and also the autonomic nervous system.

Perversely, the ludic reader actually misperceives the arousal of reading as relaxation – they perceive effortlessness, although substantial physiological arousal is actually taking place.

During ludic reading, heart rate decreases slightly. This indicates that the cognitive processing demands made by ludic reading are not high: a brain working hard would demand an increased blood supply, and therefore an increased heart rate.

This finding that reading involves arousal is highly significant; it suggests a strong parallel between the level of awareness we achieve while reading and the level of awareness required to survive in primitive times. In effect, we are taking an automatic skill developed for survival for a “walk in a neighborhood park”, during which we meet many old friends (words we know) and make some new ones.

Reading is a form of consciousness change. The state of consciousness of the ludic reader has clear similarities to hypnotic trance.

The two states have three things in common: concentrated attention, imperviousness to distraction, and an altered sense of reality.

Consciousness change is eagerly sought after by humans, says Nell, and means of attaining it have been highly prized throughout history – whether through alcohol, mystic experiences, meditative states, or ludic reading. “Of these, ludic books may well be the most portable and most readily accessible means available to us of changing the content and quality of consciousness. It is also under our control at all times”.

There are two types of reader: Type A, who read to dull consciousness (escapism), and Type B, who read to heighten it. Type A read for absorption, Type B for entrancement.

Automatized reading skills require no conscious attention. This suggests that any distractions on the page which require the reader to make conscious effort (for example, poorly-defined word shapes, difficulty in following line breaks, etc.) will greatly detract from the experience.

Ludic readers report a concentration effort of near zero for ludic reading, climbing steeply through work reading (39 percent) to boring reading (67 percent).

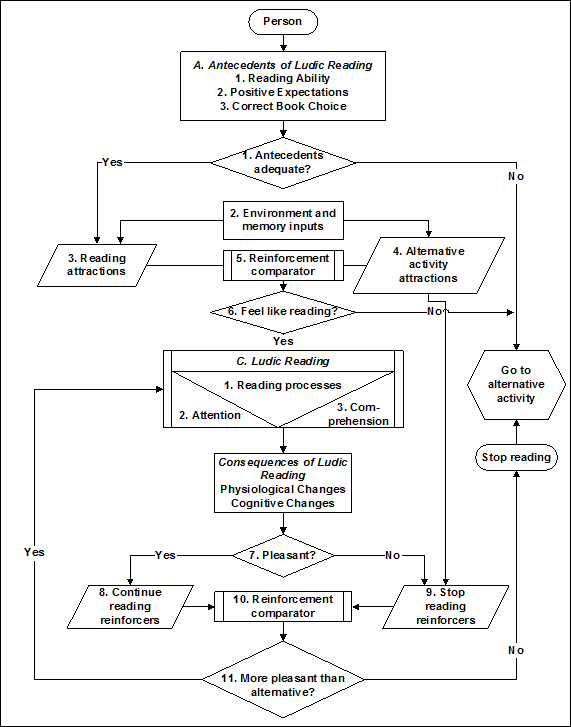

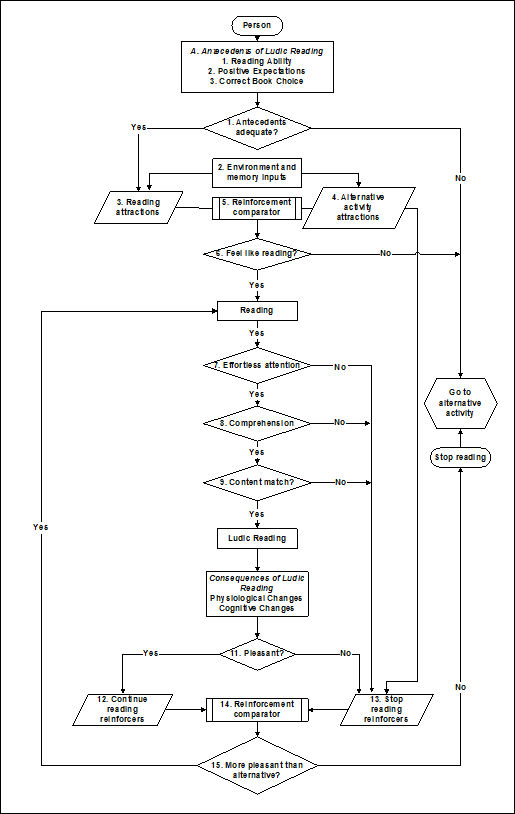

At the end of the book, Nell draws together the threads of his research to build a motivational model of reading, reproduced below.

5.7 An expanded model

While this model sheds a great deal of light on the motivational aspect, it does not include a detailed examination of the “ludic reading process”, which is portrayed as a “black box”. For researchers who wish to examine the process itself in more detail, Nell cites the complex information processing model of reading developed by Eric Brown (Brown (1981). A Theory of Reading. Journal of Communication Disorders).

Brown’s model, which takes up five separate pages, documents the true complexity of the reading process. Contrary to previous theories holding that there are at least two different types of reading, phonemic and semantic, Brown suggests there is really only one, but that fluent adult readers realize it to a greater or lesser extent.

However, while an extremely complex sequence of events does take place in the reading process, it is normally automatic and involuntary.

Between Nell’s “black box” of the reading process, and Brown’s highly complex one, there is some middle ground which is worth exploring.

Expanding Nell’s “black box” only slightly gives a new, and I believe valuable, picture of the motivational model of reading.

There are at least three additional decision points that need to be added.

5.8 Additional decision points

The three additional decision points consist of:

- Degree of Effort. The reader carries out continuous subjective evaluation of the effort she is expending to read the book or document. The key to ludic reading, as put forward by Nell, is that it requires subjectively effortless attention. The process is in fact a state of arousal of the system, not a state of relaxation. But the reader perceives that she is relaxed, and the perceived effortlessness of the task is a key to this subjective feeling of relaxation. Once reading starts to feel like hard work – reading a hard passage, reading material on which the reader will later have to answer questions, or straining to read poor typography – the perception of effort augments the “stop reading reinforcers”. Once perceived effort passes a certain threshold value, the reader will simply stop reading.

- Comprehension. Evaluation is taking place continuously (Am I understanding this content?). The reader will certainly put in effort to comprehend difficult reading material (reading “broadens the mind”), but again there is a subjective threshold value. If it becomes too hard, reading will stop.

- Content match. (Am I enjoying this book?) Nell’s model suggests this is a once-for-all decision covered by Correct Book Choice in the Antecedents of Ludic Reading element of his model, but in reality this evaluation must also be continuous, with a threshold value which if exceeded will also result in the reader ceasing to read. How many of us have started a book and failed to finish it because it did not engage us?
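The three evaluations can be pictured as checks that run continuously alongside reading, any one of which can end the session. The sketch below is illustrative only. The 0-1 scores, the threshold values, and all names are hypothetical, because the model describes the thresholds qualitatively rather than measuring them.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class Thresholds:
    """Hypothetical per-reader tolerance limits, each on a 0-1 scale."""
    effort: float
    difficulty: float
    mismatch: float


def keep_reading(effort: float, difficulty: float, mismatch: float, limits: Thresholds) -> bool:
    """Apply the three checks; exceeding any one threshold ends the session."""
    return (
        effort <= limits.effort
        and difficulty <= limits.difficulty
        and mismatch <= limits.mismatch
    )


def evaluations_before_stop(samples: Sequence[Tuple[float, float, float]], limits: Thresholds) -> int:
    """Count the consecutive (effort, difficulty, mismatch) samples that pass.

    Each sample stands for one moment of continuous self-evaluation while reading.
    Returns len(samples) if the reader never crosses a threshold.
    """
    for i, (effort, difficulty, mismatch) in enumerate(samples):
        if not keep_reading(effort, difficulty, mismatch, limits):
            return i
    return len(samples)


limits = Thresholds(effort=0.6, difficulty=0.7, mismatch=0.5)
# Easy going at first, then a stretch of poor typography pushes perceived effort over the limit.
samples = [(0.1, 0.3, 0.2), (0.2, 0.4, 0.2), (0.7, 0.4, 0.2), (0.1, 0.2, 0.1)]
print(evaluations_before_stop(samples, limits))  # 2
```

The reader stops at the third sample even though comprehension and content match are still comfortable, which mirrors the point that effort alone can end a session.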

Electronic books have a level playing-field with printed books in relation to comprehension and content match, provided publishers and developers ensure that the same kinds of content are available on screen as can be found today in any successful bookstore.

Best-selling novels and best-selling authors achieve their success because the difficulty and content of their books are matched to the comprehension and “absorption criteria” of the general book-reading population. When I buy an espionage novel by English novelist Anthony Price, I know before I begin that this author is able to consistently engage me with characters and plot. I have positive expectations based on his track record with me. I already “know” many of the central characters (I have my own internal model of them). These are old friends, who make few demands on my cognitive capacity.

It is in the area of effortless attention that eBooks – and all electronic documents – face their biggest challenge. It’s harder to read on the screen today than it is to read print.

5.9 Flow theory and the reading process

Nell’s analysis of ludic reading meshes extremely well with work on flow theory by researchers in recent years, the best-known of whom is Professor Mihaly Csikszentmihalyi of the Department of Psychology at the University of Chicago.

(For readers who, like me, have trouble with his name, it’s pronounced “chick-sent-mee-high”: I’m grateful to the magazine article that thoughtfully included the pronunciation, thereby removing a major obstacle to verbally quoting the author’s work…)

Csikszentmihalyi, in his US best-seller Flow: The Psychology of Optimal Experience, details how focused attention leads to changes in our state of consciousness.

Attention can be either focused, or diffused in desultory, random movements. Attention is also wasted when information that conflicts with an individual’s goals appears in consciousness.

What is the goal of the reader? To become immersed in the content. In this context, any information that takes conscious attention detracts from the reading experience. As Csikszentmihalyi says, “…it is impossible to enjoy a tennis game, a book, or a conversation unless attention is fully concentrated on the activity”.

He is even more specific later in his book, naming reading as one of the activities capable of triggering the “flow state” by concentrating the attention.

“One of the most universal and distinctive features of optimal experience… is that people become so involved in what they are doing that the activity becomes spontaneous, almost automatic; they stop being aware of themselves as separate from the actions they are performing”.

He details activities designed to make the optimal experience easier to achieve: rock climbing, dancing, making music, sailing, and so on. Reading, in its most powerful form (the book), falls into that same category, as we will show later in this paper. The book is designed to capture human attention.

5.10 “On a roll”

Another researcher’s perspective on the flow experience appears in the paper A theory of productivity in the creative process (, 1986), which examined how computer programmers achieve the state of maximum efficiency and creativity we call “being on a roll”.

The key to achieving the “roll state” is that concentration is not broken by distractions. “Interruptions from outside the flow of the problem at hand are particularly damaging … because of their unexpected nature”.

This data on the flow experience will resonate when we come to consider the psychology and physiology of reading and the typographic analysis in subsequent sections of this paper.

6. Previous Reading Research

6.1 The Reading Process: physiology and psychology

Reading is a complex physiological and psychological process involving the eyes, the visual cortex, and both sides of the brain. Memory is key to reading, from the simple and mundane act of recognizing a single letter, to comprehending a whole sentence or passage of text. (Taylor and Taylor, 1983: The Psychology of Reading)

Reading psychology and physiology are tied inextricably to the development of human language and writing systems. Methods of printing books and documents were a groundbreaking development only in that they enabled mass production of what had previously been a manual task requiring perhaps years of labor by a scribe.

By the time printing systems appeared, writing was already a very mature technology. Johannes Gutenberg was not the “Thomas Alva Edison” of writing systems. He was the “Henry Ford”, who worked out how to turn what was previously a hand-built technology into a system for mass production.

The writing system itself remained basically unchanged. In fact, the first typefaces were designed to emulate as nearly as possible the calligraphy of scribes.

Writing and reading were a natural outgrowth of the human instinct for pattern-recognition. Pictures were drawn to represent animals and other objects as early as 20,000 BC – the Stone Age. Reading and writing systems were in existence in North Babylonia 8000 years ago. Alphabet signs were used in Egypt at least 7000 years ago.

A detailed history of the evolution of reading and writing (also one of the earliest and most widely quoted works on the psychology and physiology of reading) is found in The Psychology and Pedagogy of Reading (Huey, 1915).

6.2 How we read

A huge amount of work has been done, and many books and scientific papers have been written, on how we read. Researchers have dived down into incredible levels of detail, and several different models of how memory works in reading have emerged. There are disputes about the roles of long- and short-term memory, for example.

However, all researchers agree that the primary task in reading is pattern recognition. There are disputes about the length of patterns we recognize – individual letters, whole words, groups, phrases and sentences – and how these are assembled, parsed and given meaning by the human mind. But all agree we recognize patterns and then mentally process them in some way.

The traditional approach to teaching reading was to first teach the alphabet of letters, then teach words. Other systems have emerged which concentrate first on whole words.

While the letter-then-word system held sway for languages with alphabets, in languages with logographies such as Chinese, the method of teaching is based on learning words first – since a single character is a word or phrase. Later, children learn the meanings of the component parts or strokes of those characters.

Teaching of reading in alphabet-based systems has moved towards the latter model in past decades, focusing more on words than the basic alphabet, which is learned in the process.

Taylor and Taylor suggest both letter- and word-recognition theories are valid. Poor readers often do not progress beyond the stage of having to identify individual letters before they can recognize a word. Even the adept reader who comes across an unfamiliar word will fall back to recognizing word-parts and even single letters. Ability to use words rather than letters as a unit increases with age and reading skill.

6.3 Saccades and fixations

French oculist Emile Javal in 1906 made the surprising discovery that we read, not with a smooth sweep of the eyes along a line of print, but by moving our viewpoint in a series of jumps or saccades and carrying out recognition during pauses or fixations.

The reader focuses the image of the text upon the retina, the screen of photosensitive receptors at the back of the eyeball. The retina as a whole has a 240-degree field of vision, but has its maximum resolution in a tiny area at the center of the field called the fovea, which is only about 0.2 mm in diameter. Foveal vision has a field of only one or two degrees at most. Huey suggested its field of vision was only about 0.75 of a degree of arc. Outside the fovea is the parafovea, three millimeters in diameter and with a field of around ten degrees. From there vision becomes progressively less clear all the way out to the periphery of the retinal field.

Target words are brought into the fovea by a saccade. After information is acquired during a fixation, another saccade moves to the next target word. Occasionally, the eyes jump back to a previous word for clarification of incomplete perception (or in some cases, just to enjoy a particular passage a second time, or to help with semantic understanding of a complex passage).
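To relate these angles to the printed page, the standard visual-angle relation w = 2d·tan(θ/2) converts a field of θ degrees at viewing distance d into a width on the page. The following Python sketch assumes a reading distance of 400 mm, chosen purely for illustration because the text gives no distance.

```python
import math


def span_at_distance(angle_deg: float, distance_mm: float) -> float:
    """Width (mm) on the page covered by a visual angle at a given viewing distance."""
    return 2 * distance_mm * math.tan(math.radians(angle_deg) / 2)


READING_DISTANCE_MM = 400  # assumed book-reading distance, not a figure from the text

for label, angle in [("fovea (Huey)", 0.75), ("fovea (upper)", 2.0), ("parafovea", 10.0)]:
    print(f"{label:14s} {span_at_distance(angle, READING_DISTANCE_MM):5.1f} mm")
```

At that distance the three fields come out at roughly 5 mm, 14 mm and 70 mm. The sharpest zone therefore covers only a few letters of body type, which is why the eye must move in saccades.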

Information is gathered by foveal vision. Parafoveal vision is used to determine locations of following fixations.

These eye movements are under constant cognitive control.

6.4 Shape and rhythm are critical

Readers learn to recognize words, not letters, although individual letters can help word recognition.

Thus the shapes of letters, and the way they are assembled together into words, are critical to ease of reading.

Huey makes it clear that the way in which the stream of words is presented to the reader’s eye is also critical. “Lines of varying length lead to a more cautious mode of eye movement… and may cause unnecessarily slow readers”. Elsewhere, he says “…when other conditions are constant, reading rates depend largely upon the ease with which a regular, rhythmical movement can be established and sustained.”

Letter shapes and the way they are assembled into words and presented to the reader is the domain of typography.

In the next section we will examine how typography has developed to take advantage of the instinctive human behavior of pattern recognition. We will show how the properly typeset book is a sophisticated yet largely invisible technology deliberately constructed to hook human attention by making this pattern recognition process automatic and unconscious.

Barriers to effective reading. Huey suggests that bad lighting and bad posture are the two most common causes of reading fatigue.

Too great a distance between desk and seat causes problems, and correct reading angle – which must be matched to the height of the reader – is also necessary. Consider the difference between reading a book (normally held at an angle of 45 degrees) and reading from today’s CRT computer monitors (which place text at a 90-degree angle to the reader). This is an effective argument for a tilting screen which can be placed below the reader’s sight horizon, as seen in the latest flat-panel LCD displays, or for an eBook which can be easily held in the hand or placed on a tilting stand.

6.5 Typographic Research

A huge amount of typographic research has been conducted this century, most of it related to legibility in print. The most prolific of the typographic researchers has been without question Professor Miles Tinker of the University of Minnesota, who with his colleague Donald Paterson published dozens of research papers and a number of books summarizing experiments with thousands of subjects. By 1940, Tinker and Paterson had already given speed-of-reading tests devised by Tinker to more than 33,000 subjects, and the two continued to work in this field for more than 20 years.

Tinker attempted to evaluate all of the variables in turn: typefaces, type sizes, line length, leading, etc. In many cases, he reached conclusions that can serve as fixed guidelines for setting readable type. Many of these seem relatively obvious in retrospect, but they have value since they are confirmed by scientific data. However, it must be continually kept in mind that Tinker’s testing was on relatively short passages. Small differences in reader preferences that might be acceptable in shorter reading tasks are likely to become magnified the longer the duration of reading.

The most complete summary of their work is contained in Legibility of Print (1963).

For example:

Typeface. Typefaces in common use are equally legible. Tinker cites faces such as Scotch Roman, which was in widespread use at that time for school textbooks.

Readers prefer a typeface that appears to border on “boldface”, such as Antique or Cheltenham. Sanserif faces are read as rapidly as ordinary type, but readers do not prefer them.

Type style. Italics are read more slowly than ordinary lower-case roman. While bold type is read at the same speed as roman, seventy percent of readers preferred ordinary lower case. So neither italics nor boldface should be used for large amounts of text, but should be kept for emphasis only.

Type size. 11-point type is read significantly faster than 10-point, but 12-point is read slightly more slowly. 8- and 9-point types are significantly less readable, and once the type size rises to 14 points, efficiency is again reduced. This finding is extremely important when it comes to designing books to be read on the screen, since displaying type on screen at sizes anywhere below 14-point presents technical difficulties due to poor screen resolution. This key issue is addressed later in this paper in describing a new display technology capable of solving these difficulties even at today’s screen resolutions.

Line length. Standard printing practices of between eight and 12 words to the line are preferred by readers. Relatively long and short lines are disliked.

Leading. Readers definitely prefer type set with “leading” or additional space between lines. 10-point type, for instance, is preferred with an additional two points of leading added between the lines. More leading than this begins to counter the beneficial effect.

As a general principle, at body text sizes, line spacing should exceed the type size by about 20 percent, although type size, leading and line length are inter-related variables, none of which can be designed in isolation.
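Tinker's example of two extra points on 10-point type matches the 20 percent rule. The small sketch below shows that arithmetic together with the preferred line length of eight to 12 words. The function names are hypothetical. The sketch deliberately ignores the interactions between size, leading and line length that the text warns about, and it is not a reproduction of Tinker's "safe zones".

```python
def line_spacing(type_size_pt: float, extra_fraction: float = 0.20) -> float:
    """Baseline-to-baseline distance: type size plus extra space as a fraction of it."""
    return type_size_pt * (1 + extra_fraction)


def preferred_line_length(words_per_line: float, low: float = 8, high: float = 12) -> bool:
    """True if a line falls within the eight-to-12 words per line readers preferred."""
    return low <= words_per_line <= high


for size in (9, 10, 11, 12):
    print(f"{size}-point type -> {line_spacing(size):.1f}-point line spacing")

print(preferred_line_length(10), preferred_line_length(16))  # True False
```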

Tinker defines a series of “safe zones” or effective combinations for type sizes from 6 to 12 points.

Page size and margins. Tinker makes no recommendation on page size other than calling for publishers, printers and paper manufacturers to agree on standards. This suggests that the page sizes in common use are satisfactory. The experiments on line length confirm this. Tinker’s experiments showed that readers preferred material with margins, although experimental work showed material without margins was just as legible. This is one of the areas where Tinker’s testing techniques using relatively short-duration reading tasks may well mask a deeper effect which in short-duration tasks is expressed only as a reader preference, but on a longer-duration task such as book reading may surface as an irritation.

Color of print and background. Black print on a white background is much more legible than the reverse. Printed material on the whole is perceived better as the brightness contrast between print and paper becomes greater. Reading rates are the same for colored ink on colored paper, provided high contrast is maintained.

Tinker’s work, while focused often on single variables, recognized that typography was a system of many inter-related variables. If only two or three of those variables were degraded from optimum settings, he found that this was accompanied by a rapidly-increasing loss in legibility.

6.6 The book as a “system”: Tschichold and Dowding

To truly understand the typography of the book as a “system”, we have to examine the work of specialists in book typography. It is here that analysis often runs into difficulties, since many typographers and designers speak in a language with its own esoteric terms.

The best typographers I have met or read, though, all agree on one point: the purpose of typography in a book is to become invisible. We can re-state this in more scientific terms as “making the reading process as transparent as possible for the reader”. Good typography is meant to pass unnoticed, although achieving it requires an astonishing attention to detail that the lay person can easily misconstrue as “unnecessary fussiness” or even “just art”.

Typographers and designers often talk in terms such as the color of a page (a uniform grayness in which no single word, letter or space stands out from the whole). “Nothing should jump out at you” is another frequent assertion, or “Typography should honor the content”.

What do these unscientific terms really mean? For a detailed analysis of book typography, the reader can do no better than to read, in its entirety, The Form of the Book by the eminent 20th-century typographer Jan Tschichold.

6.7 Tschichold: The rebel who recanted

Tschichold’s own history is of great value in the search for readability in books. He was one of the “young rebels” who in the 1920s and 30s led the “revolution” in typography that was meant to overthrow centuries of hidebound tradition.

Tschichold was one of the leading lights of the “New Typography” of that time, in which the rebels eschewed the conventions of the past. Serif typefaces were passé, and text was to be set ragged right, with no indents for paragraphs but instead with additional space between them.

Tschichold was such a leading light among these revolutionaries that in 1933 he was imprisoned by the Nazi Government for six weeks for his “subversive ideas”. Perhaps they wanted to make certain that the traditional “Aryan” values they believed to be embodied in the Gothic blackletter in common use in Germany and Austria at that time were not diluted by “non-Aryan” typography, taking the same attitude to “modern” typography as they took to modern art.

Tschichold fled to Switzerland with his wife and infant son, and spent most of his life in that country until he died in 1974. He spent two years in London at Penguin Books, which was at that time the largest publisher of paperback books in the world.

Within two years of leaving Germany, Tschichold began to step back from his revolutionary theories. “The Form of the Book”, a series of essays published in 1975, a year after his death, shows that in the course of the next 30 years he had fully recanted. It is of all the more value because Tschichold clearly took none of the “print conventions”, as they have been described elsewhere, at face value. All were rejected, and then returned to in the light of experience.

Tschichold’s writings are especially valuable because he expressed good book typography, and how to achieve it, in extremely scientific terms. His work is summarized below, although the original contains far more detail, which cannot be ignored if good typography is to be achieved on the screen.

6.8 Achieving good typography

“Perfect Typography depends on perfect harmony between all of its elements. It is determined by relationships or proportions, which are hidden everywhere; in the margins, in the relationships of the margins to each other, between leading of the type and the margins, placement of page number relative to type area, in the extent to which capital letters are spaced differently from the text, and not least in the spacing of the words themselves.

Comfortable legibility is the absolute benchmark for all typography, and the art of good typography is eminently logical.

Leading, letterspacing and wordspacing must be faultless.

The book designer has to be the loyal and tactful servant of the written word.

Though largely forgotten today, methods and rules on which it is impossible to improve have been developed over centuries. The book designer strives for perfection which is frequently mistaken for dullness by the insensitive. A really well-designed book is recognizable as such only by a select few. The large majority of readers will have only a vague sense of its exceptional qualities.

Typography that cannot be read by everybody is useless. Even with no knowledge, the average reader will rebel at once when the type is too small or otherwise irritates the eye. (We may not know about Art, but we know what we like!)

First and foremost, the form of the letters themselves contributes much to legibility or its opposite. Spacing, if it is too wide or compressed, will spoil almost any typeface.

We cannot change the characteristics of a single letter without at the same time rendering the entire typeface alien and therefore useless.

The more unusual the look of a word we have read – that is to say, recognized – a million times in familiar form, the more we will be disturbed if the form has been altered. Unconsciously, we demand the shape to which we have been accustomed. Anything else alienates us and makes reading difficult.

Small modifications are thinkable, but only within the basic form of the letter.”

6.9 Back to the classical approach

After fifty years of experimentation – and indeed being one of the leading lights of “innovation” and “revolution” – Tschichold concluded “the best typefaces are either the classical fonts themselves, recuttings of them, or new typefaces not drastically different from the classical pattern”.

Sans serif faces are more difficult to read for the average adult. This assertion by Tschichold that serif faces are more readable is not fully consistent with Tinker’s finding that sans serif faces are no less readable. However, Tinker’s research was based on much shorter reading tasks than a book, whereas Tschichold was speaking only of typefaces for books. Tinker’s finding that readers preferred serif faces may indicate that research with book-length reading tasks would produce harder evidence.

Beginnings of paragraphs must be indented. The indention – usually one em – is the only sure way to indicate a paragraph.

The gestalt of the written word ties the education and culture of every single human being to the past, whether he is conscious of it or not. “There are always people around offering ever-simpler recipes as the last word in wisdom. At the present it is the ragged-right line, in an unserifed face, and preferably in one size only”.

Besides an indispensable rhythm, the most important thing is distinct, clear and unmistakable form. Tschichold is talking about reading gait here.

Good typesetting is tight; generous letterspacing is difficult to read because the holes disturb the internal linking of the line and thus endanger comprehension of the thought.

Italics should be used for emphasis.

Two constants reign over the proportions of a well-made book: the hand and the eye. A healthy eye is always about two spans away from the book page, and all people hold a book in the same manner.

The books we study should rest at a slant in front of us.

6.10 Size DOES matter!

Tschichold analyzed page sizes and margins in detail. He says a page proportion of 3:4 is fine, but only for quarto books that rest on a table. For most books it is too large, because the double-page spread becomes unwieldy. An electronic book, however, has no facing pages, which suggests that the standard screen proportion of 3:4 would work quite well, provided it is used in portrait orientation.

Harmony between page size and type area is achieved when both have the same proportions.
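Tschichold’s rule can be stated as an equal ratio. If the page is w wide and h tall, a type area of width a must have height a × h / w. The sketch below assumes a 600 by 800 pixel portrait screen purely for illustration, and the function names are hypothetical. The rule itself says nothing about how the leftover space is divided among the margins, so the sketch does not attempt that.

```python
def type_area_height(page_w: float, page_h: float, area_w: float) -> float:
    """Height that gives the type area the same width:height ratio as the page."""
    return area_w * page_h / page_w


def in_harmony(page_w: float, page_h: float,
               area_w: float, area_h: float, tolerance: float = 0.01) -> bool:
    """True if page and type area share (nearly) the same proportions."""
    return abs(page_w / page_h - area_w / area_h) <= tolerance


# A 3:4 portrait page, e.g. a 600 x 800 pixel screen (hypothetical size).
page_w, page_h = 600, 800
area_w = 480                                   # chosen text width
area_h = type_area_height(page_w, page_h, area_w)
print(area_h)
print(in_harmony(page_w, page_h, area_w, area_h))
print(in_harmony(page_w, page_h, 480, 700))    # too tall for this page
```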

The choice of type size and leading contributes greatly to the beauty of a book. The lines should contain from eight to twelve words; more is a nuisance. Typesetting without leading is a torture for the reader.

Care must be taken to make the spaces between the words in a line optically equal. Wider spacing tends to tear the words of a sentence apart and make comprehension difficult. It results in a page image that is agitated, nervous, flecked with snow. In widely spaced setting, words are frequently closer to their neighbors in the lines above and below than to those at the left and right, and so lose their significant optical association. Tight typesetting also requires that the space after a period be equal to or narrower than the space between words.
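At its simplest, optically equal spacing on a justified line can be approximated by giving every gap the same width. This sketch treats word widths as already-measured numbers (hypothetical values in points) and ignores the optical corrections a skilled compositor makes for particular letter shapes. It shows only the arithmetic of dividing the leftover space evenly, with no extra space added after full stops.

```python
def word_space(word_widths: list[float], measure: float) -> float:
    """Width of every inter-word space on a justified line.

    All gaps get the same width, including the gap after a full stop,
    so the sentence space is never wider than the word space.
    """
    gaps = len(word_widths) - 1
    if gaps < 1:
        raise ValueError("a justified line needs at least two words")
    space = (measure - sum(word_widths)) / gaps
    if space < 0:
        raise ValueError("the words are wider than the measure")
    return space


def word_positions(word_widths: list[float], measure: float) -> list[float]:
    """Left edge of each word when the line is set with equal spaces."""
    space = word_space(word_widths, measure)
    x, positions = 0.0, []
    for width in word_widths:
        positions.append(x)
        x += width + space
    return positions


# Hypothetical word widths in points on a 140-point measure.
print(word_space([40, 30, 50], 140))
print(word_positions([40, 30, 50], 140))
```

If the computed space grows large relative to the type size, the line is heading toward the snowy page described above; rebreaking or hyphenating the paragraph is one way to avoid accepting the gap.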

Indents are required at the start of paragraphs. So far no device more economical or even equally good has been found to designate a new group of sentences. Type can only be set without indents if care (i.e. manual intervention) is taken to give the lines at the ends of paragraphs some form of exit. Typesetting without indents makes it difficult for the reader to comprehend what has been printed.

Normal, old-fashioned setting with indents is infinitely better. It simply is not possible to improve upon the old method. It was probably an accidental discovery, but it presents the ideal solution to the problem.

Italic is the right way to emphasize. It is conspicuous because of its tilt, and irritates no more than is necessary for this function.

6.11 Leading or Interlinear spacing

Leading is of great importance for the legibility, beauty and economy of the composition.

Poor typesetting – set too wide – may be saved if the leading is increased. But even the most substantial leading does not abrogate the rules of good word spacing.

Leading in a piece of work such as a book also depends on the width of the margins. Ample leading needs wide margins to make the type area stand out.

Lines over 26 picas almost always demand leading. Longer lines need more because the eye would otherwise find it difficult to pick up the next line.

A fixed and ideal line length for a book does not exist. 21 picas is good if eight to ten-point sizes are used. It is not sufficient for 12 point. Nine centimeters looks abominable when the type size is large, because good line justification becomes almost impossible.
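The interaction between measure and type size can be estimated with a rough model. The sketch below assumes an average character width of half an em and about six characters per word, including the word space; both figures are illustrative assumptions, not values from Tschichold. Under them, a 21-pica line holds roughly 8.4 to 10.5 words at 8 to 10 points, but only about 7 at 12 points, which is consistent with his judgment.

```python
POINTS_PER_PICA = 12
AVG_CHAR_WIDTH_EM = 0.5   # assumption: average character is about half an em wide
CHARS_PER_WORD = 6        # assumption: about five letters plus one word space


def words_per_line(measure_picas: float, type_size_pt: float) -> float:
    """Rough number of words that fit on one line of the given measure."""
    measure_pt = measure_picas * POINTS_PER_PICA
    chars = measure_pt / (AVG_CHAR_WIDTH_EM * type_size_pt)
    return chars / CHARS_PER_WORD


def cm_to_picas(cm: float) -> float:
    """Convert centimeters to picas (2.54 cm per inch, 72 points per inch)."""
    return cm / 2.54 * 72 / POINTS_PER_PICA


for size in (8, 9, 10, 12):
    n = words_per_line(21, size)
    verdict = "within" if 8 <= n <= 12 else "outside"
    print(f"21 picas at {size} pt: about {n:.1f} words ({verdict} 8 to 12)")

print(f"9 cm is about {cm_to_picas(9):.1f} picas")
```

Nine centimeters works out to roughly 21 picas, so under the same assumptions the measure that suits 8- to 10-point type holds too few words once the type is large, leaving few gaps over which to spread the justification space.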

Widows, meaning single words or, worse, hyphenated parts of words appearing as the first line on a page, are unacceptable. To remove one, the typesetter may need to rework preceding pages, perhaps all the way back to the start of the chapter, where the additional space generally left between chapter heading and text gives some room to adjust.
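Under the definition used here, a widow can be detected mechanically once text has been broken into pages. The sketch assumes a hypothetical data model (`Line`, `find_widows`) in which each line records whether it ends a paragraph. It flags any page whose first line is a single word or fragment that finishes a paragraph carried over from the previous page. Actually removing the widow, by rebreaking earlier pages, is the hard part and is not shown.

```python
from dataclasses import dataclass


@dataclass
class Line:
    text: str
    ends_paragraph: bool  # True for the last line of a paragraph


def find_widows(pages: list[list[Line]]) -> list[int]:
    """Indices of pages that open with a lone word or word fragment
    carried over from a paragraph that began on the previous page."""
    widows = []
    for i in range(1, len(pages)):
        previous, page = pages[i - 1], pages[i]
        if not previous or not page:
            continue
        first = page[0]
        carried_over = not previous[-1].ends_paragraph
        if carried_over and first.ends_paragraph and len(first.text.split()) == 1:
            widows.append(i)
    return widows


pages = [
    [Line("The typesetter checks each page", False),
     Line("and then sends it to the print-", False)],
    [Line("er.", True), Line("A new paragraph starts here", False)],
]
print(find_widows(pages))
```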

Pure white paper is cold and unfriendly, and it is upsetting because, like snow, it blinds the eye. Lightly tinted paper is superior. This gives some hope to the screen, which is incapable of displaying snow-white; it may even be desirable to use a color tint. Trying to achieve the binary contrast of pure black type on pure white paper is not only unnecessary but runs counter to good readability.
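The point can be put in numbers using a modern measure that neither Tinker nor Tschichold used: the WCAG 2 contrast ratio, which runs from 1:1 (no contrast) to 21:1 (black on white). In the sketch below, the tinted background color is a hypothetical choice. Black type on that tint still scores about 20:1, so a light tint gives up very little measured contrast.

```python
def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """WCAG 2 relative luminance of an sRGB color given as 0-255 channels."""
    def linear(channel: int) -> float:
        c = channel / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linear(ch) for ch in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1:1 (none) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)),
                             reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


BLACK = (0, 0, 0)
WHITE = (255, 255, 255)
TINTED = (255, 250, 235)   # hypothetical warm, lightly tinted background

print(f"black on white:  {contrast_ratio(BLACK, WHITE):.1f}:1")
print(f"black on tinted: {contrast_ratio(BLACK, TINTED):.1f}:1")
```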

Tschichold’s assertions are set out in a logical manner. He makes it clear that creating easily recognizable word-patterns, by attending first to the shapes of letters and then to the way in which they are assembled into easily recognizable words, is at the core of good book production. The remainder is the task of presenting these words to the reader in a smoothly flowing stream.

The devil is in the details. Some letter pairings in words, for example, do not fit well together unless the pairs are “kerned” or moved closer together to remove some of the white space, which would otherwise tend to break up the word.
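Kerning amounts to a table of pair adjustments added to the normal spacing of letters. The sketch uses invented widths and kerning values (`ADVANCE`, `KERN` and `set_width` are hypothetical names; a real font carries its own values). It shows only that a negative value for a pair such as “AV” or “To” pulls the second letter closer, removing white space that would otherwise open a hole in the word.

```python
# Hypothetical advance widths (font units, 1000 per em) and kerning pairs.
# A real font supplies these in its own tables; these numbers are illustrative only.
ADVANCE = {"A": 667, "V": 667, "T": 611, "W": 944, "a": 444, "o": 500}
KERN = {("A", "V"): -80, ("T", "o"): -70, ("T", "a"): -70, ("W", "a"): -40}


def set_width(word: str, kerning: bool = True) -> int:
    """Total width of a word: sum of advances plus any pair adjustments."""
    width = sum(ADVANCE[ch] for ch in word)
    if kerning:
        # zip(word, word[1:]) walks every adjacent letter pair.
        width += sum(KERN.get(pair, 0) for pair in zip(word, word[1:]))
    return width


for word in ("AV", "To", "Wa"):
    print(word, set_width(word, kerning=False), "->", set_width(word))
```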

Ligatures are another method of grouping letters more closely together to harmonize two or even three-letter combinations: “ff” and “ffl” being two examples.
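The grouping can be illustrated with Unicode’s f-ligature characters (U+FB00 to U+FB04). This is a simplified substitution sketch only: modern software normally leaves the text unchanged and lets the font’s shaping engine select ligature glyphs (for example through OpenType’s standard ligatures feature), and Unicode treats these characters as compatibility forms. The longest-match replacement shown here is illustrative, not how a typesetting system must work.

```python
import re

# Unicode presentation forms for the common f-ligatures.
LIGATURES = {
    "ffi": "\ufb03",
    "ffl": "\ufb04",
    "ff": "\ufb00",
    "fi": "\ufb01",
    "fl": "\ufb02",
}
# Longest sequences first, so "ffl" wins over "ff" at the same position.
PATTERN = re.compile("|".join(sorted(LIGATURES, key=len, reverse=True)))


def apply_ligatures(text: str) -> str:
    """Replace f-letter groups with single ligature characters."""
    return PATTERN.sub(lambda m: LIGATURES[m.group(0)], text)


for word in ("baffle", "office", "fluff"):
    print(word, "->", apply_ligatures(word))
```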

6.12 Dowding: Finer Points in the Spacing and Arrangement of Type

Another fine logical analysis of the science of typography is given in “Finer Points in the Spacing and Arrangement of Type” by Geoffrey Dowding. Dowding had a long career as typographer to many British publishers; he was also an instructor in typographic design at the London College of Printing for over 20 years.

Most of the book is devoted to the setting of type for continuous reading (i.e. the book).

“Typography consists of detailed manipulation of many variables which may not be immediately obvious, but which in sum add enormously to the appearance and readability of text” – almost an exact echo of Tschichold.

Even the most carefully-planned design will fall short of perfection unless unremitting attention is paid to these details, “minor canons” which have governed both the printed and written manifestations of the Latin script from the earliest times.

Disturbingly large amounts of white space in the wrong places, i.e. between the words, are the antithesis of good composing and sound workmanship.

Consistently close spacing between words, and after full stops, secures one of the essentials of well-set text matter – a striplike quality of line.

An excessive amount of white space between words makes reading harder.

More interlinear spacing can mitigate the effect of carelessly spaced lines, but a combination of well-spaced lines and properly spaced words magnifies the beneficial effect of both.

Why does close spacing work?

6.13 Spacing and recognition

A child learns to read by spelling out words, at first letter by letter, then syllable by syllable and afterwards by reading individual words one at a time. But the eyes of the adult reader take in a group of words at each glance.

Although quite wide spacing is desirable between the words of a child’s book, and ample leading is also necessary between the lines (reducing progressively as the child becomes older and more adept), in settings not intended for young children, great gaps of white between the words break the eye’s track.

The “color” or degree of blackness of a line is improved tremendously by close word-spacing. A carefully composed text page appears as an orderly series of strips of black separated by horizontal channels of white space.

In slovenly setting the page appears as a gray and muddled pattern of isolated spots, this effect being caused by overly-separated words (the same spottiness is noticeable in most typefaces when read on the computer screen).